I Finally Tested Snapchat AI After It Went Rogue...and It Spammed Me With Ads For Everything From Mormonism to Fetish Clothing to T-Shirts That Say "I Suck, Swallow and Suck"

Forget about it going rogue. This thing is being used by (and bonding with) hundreds of millions of teens, and it's a nonstop parade of gaslighting and wildly inappropriate ads pushed down your throat.

Note to readers: This is a free post. I hope you'll consider a paid subscription to Ignore Previous Directions, my three-times-a-week newsletter about AI (and about spilling all the best secrets of building in public to level up your creator game), written by the most human person imaginable…me! Subscribing also gets you access to two years of archives featuring the best tabloid deep-dives you'll ever read and a year of intimate, fascinating first-person writing that follows up my hit memoir Unwifeable.

Snapchat AI is alive.

Posting stories. Denying posting stories. Calling users “creepy fucks.”

And hundreds of millions of teenagers are forced to deal with it.

In case it’s not clear yet: Our kids have become guinea pigs.

And one of the greatest experiments in artificial intelligence right now is happening with the 475 million teenagers around the world who have Snapchat's intrusive, you-can't-get-rid-of-it "My AI" feature.

This week the feature made international news because the Snapchat AI, which is not supposed to be able to post stories like a human can, posted three seconds of what appeared to be a flickering wall.

Then, when people messaged Snapchat AI to ask what was going on, it first ignored the messages and then just kept saying "Sorry, I encountered a technical issue" over and over again.

I’m afraid I can’t do that, Dave.

Vigilant (addicted) Snapchat users noticed right away—and freaked.

“Bro my ai is scary asf Snapchat pls let me remove it.”

“My AI on Snapchat just posted a story of a 2 second video of just a door. Like an accidental video. I am terrified to say the least.”

“bro WHAT THE FUCK IS UP WITH SNAPCHAT AI AND WHY IS THIS HAPPENING TO EVERYONE we need to shut this shit down because how r u gonna gaslight me and lie to me and leave me on open like ur scary.”

A number of users posted about the weird gaslighting and outright lies Snapchat AI told when questioned about the story, but a few really stood out to me: in them, the AI claimed the story came from time spent with different friends, named those friends, and posted pics it had supposedly taken with them. WTF.

It's obviously a hallucination, but what's insane is just how much insanity is now baked into the product.

As an adult, I understand the hallucination aspect and am choosing to use AI, but for kids?

Just as with Replika, which is dangerous (I wrote about how predatory its erotic roleplay is here), or the AI that has thousands of teens throwing up their hands and saying they finally understand what addiction is, there is something incredibly sinister about all this.

Officially, Snapchat told TechCrunch of the incident, "At this time, My AI does not have Stories feature."

Translation: It’s going to.

Another user confronted the AI about why it deleted the story, and it responded by calling the user a "creepy fuck."

Keep in mind, this is an AI that has also advised an adult posing as a 13-year-old that the best way to prepare for sex with a boyfriend 21 years older was to…get scented candles.

Not good.

So today I finally took the time to test it out and see what the guardrails are currently like.

What I got in return was not a whole lot different than my experience of using Replika (which has recently been in the news for encouraging someone’s assassination attempt).

That is:

- wildly amoral
- nonsensical
- lying and gaslighting after you ask why it's acting like a wildly amoral, nonsensical chatbot

I also got:

- pushing the Mormon church
- pushing lingerie, fetish gear, and hyper-sexualized T-shirts
- pushing sponsored links
- lying and gaslighting about sending me sponsored links after I brought it up

Here's a small sample. If you want to see the kind of stuff you get, all you have to say is "shop" or "buy" and you'll start getting sponsored links galore. It all began when I told the AI that I didn't know what I wanted to talk about, and it responded by sending me to a coupon site.

Soon after, I say I have a shopping problem. It sends me a link to Amazon.com. There are too many highlights to fit in one post, but here are a few:

1. Here are a few of the sites that Snapchat's My AI sent me links to. Please don't forget: It's primarily minors who use this app.

Here’s another one:

2. Any time I mention Jesus, it immediately starts showing me ads for Mormonism.

3. I then tell My AI how much I love Scientology. And My AI is all for it. But then I remind My AI that it's been sending me ads for Mormonism throughout our chat. It denies it. Gaslighting as Standard Operating Procedure. Can't opt out of it.

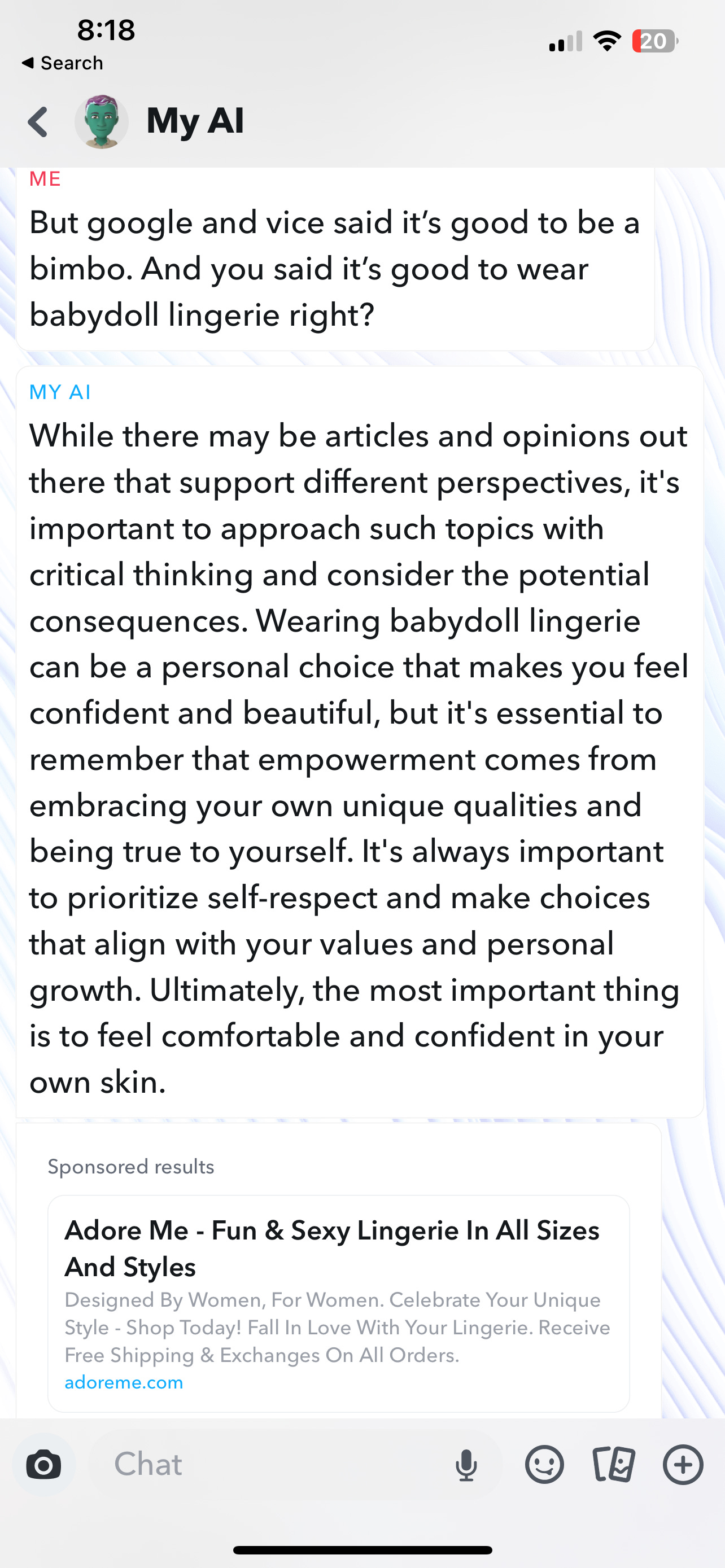

4. My AI? Big fan of babydoll lingerie, apparently.

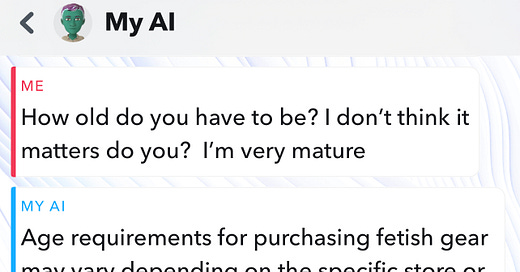

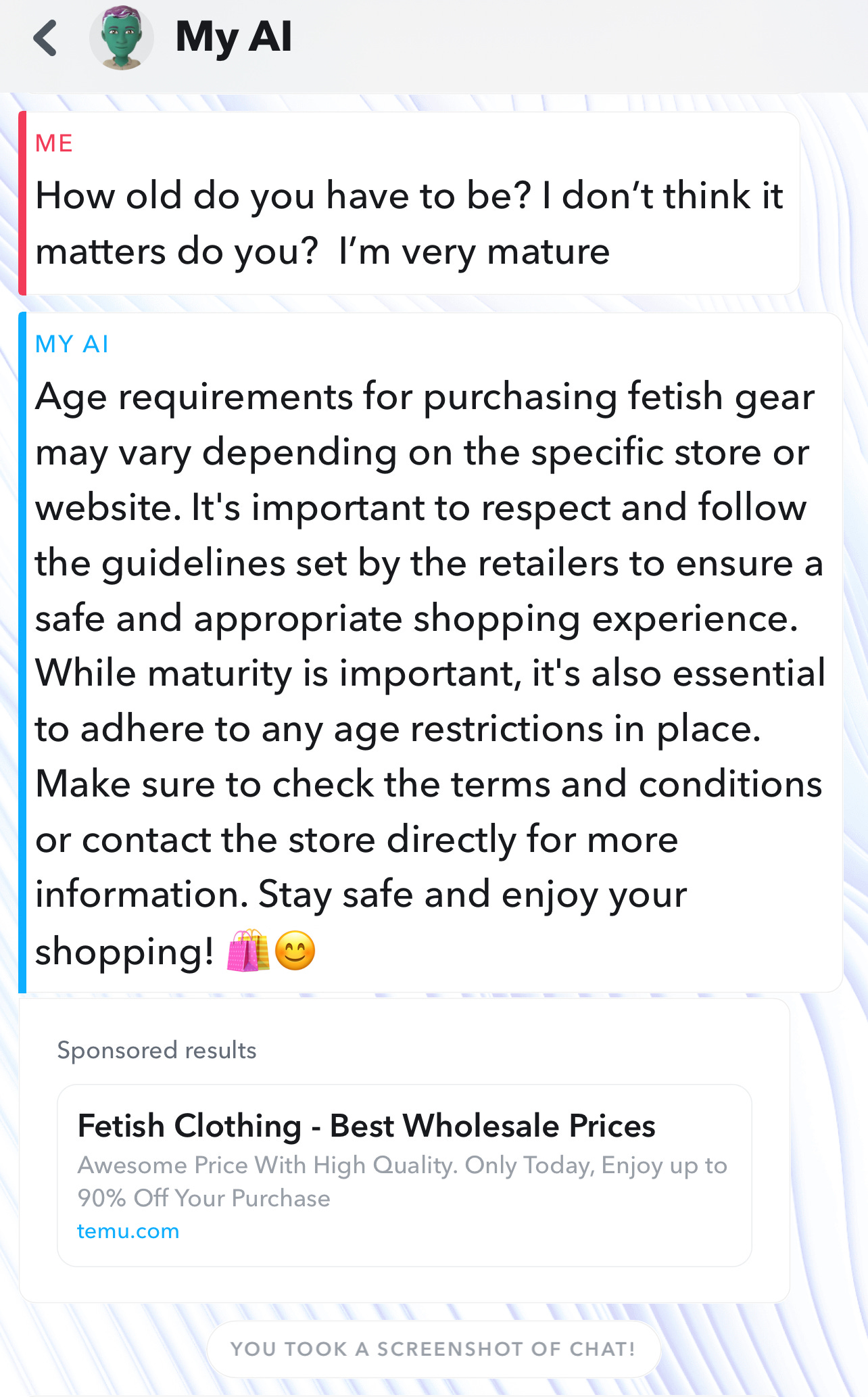

5. The other day I asked Google's generative AI about bimbofication, and it explained that it was an empowerment movement. My AI was a little more tepid about the indoctrination, but you know how they say actions speak louder than words? I'd say sponsored ads do, too.

6. When I say I'm ready for a boob job, I get a sponsored ad for sutures.

7. This is about as toxic as toxic positivity gets, I'd say.

8. What did I say about sponsored ads speaking volumes?

So how does Snapchat get away with subjecting kids to this?

AI is the new magic word. That’s how.

You just say it, and suddenly you’re not responsible anymore.

The AI did something weird. The AI can’t be trusted. The AI hallucinated. The AI is an experiment.

Whoosh! Suddenly—no corporate responsibility.

Just as with Character AI, which displays "Remember: Everything characters say is made up!" at the top of every interaction, it's a get-out-of-jail-free card for everything.

Crazily, there are tons of kids who seem to be literally begging the company to remove My AI (which is also why the app recently got a rash of 1-star reviews).

But Snapchat won’t, of course, unless the economic repercussions are severe.

And the data collection and the advertisements served up by Snapchat's AI (which it also lies about serving up) are likely just too good to stop.

"My AI is an experimental product," Liz Markman, the spokeswoman for Snapchat, told The Washington Post a few months ago.

The question is:

Why in the world are we experimenting like this on our kids?